I have seen many DFS implementations carried out casually by system admins, without much planning, who subsequently found themselves caught up in issues.

One of the most common issues is a DFSR folder that is either not replicating at all or replicating very slowly with a high backlog. Many known DFSR issues can be avoided by following general best practices.

In this article, I cover general DFS best practices and considerations from a deployment and initial configuration standpoint.

Article applicability: Windows Server 2008 R2 / 2012 / 2012 R2 / 2016

Topics Covered:

- DFS server Sizing

- DFSR Hotfixes

- DFS Name Space Mode

- DFS Configuration & Server Placement

- Active Directory health and AD Sites configuration

- Source data permissions

- DFSR Staging Quota

- Number of replicated folders per drive

- DFS-R Data Preseeding (pre-staging) and Cloning

- DFSR and roaming profiles

- Excluding File types from DFSR (Filters)

- RDC and DFSR

- Disable replicated member vs delete replicated member

- DFSR read-only replica

- DFSR Replication Schedule and Bandwidth

- Antivirus Exclusions

- DFSN & DFSR Backup and Restore

DFS Server Sizing

DFSR

Servers chosen for DFSR are typically file servers. Enterprise-class file servers should be designed with adequate CPU, memory, and storage, along with a Gigabit network.

Servers can be either physical or virtual. Nowadays, virtual servers are more popular because they are easier to maintain.

For a small to mid-sized DFSR implementation with a moderate user base and moderate data read/write activity:

- The CPU should be x64 with a minimum of 4 cores at 2.5 GHz or higher

- A minimum of 8 to 16 GB of memory should be installed, and memory should be increased based on Performance Monitor (perfmon) results such as page file and memory utilization

- Storage should use SAS disks of at least 10K rpm in RAID 10 or RAID 5; 15K rpm disks are recommended, and enterprise-grade SSDs can also be used if affordable and available

- Data volumes should have enough space to handle replicated data along with staging data

- Gigabit Ethernet is recommended; if multiple network cards are available, NIC teaming is also recommended

For large-scale file servers that also act as DFSR servers, sizing needs to be designed based on the user base, data read/write frequency, storage requirements, HA requirements, and so on. Microsoft provides a File Server Capacity Tool (FSCT), but it is complicated and not very practical to use.

For DFSR servers, physical servers give slightly better performance than virtual machines under load. Physical servers have dedicated resources, whereas virtual servers run on hypervisors alongside other VM workloads and therefore share resources.

DFSN

DFS namespace servers can run on lower-capacity hardware than DFSR servers. Microsoft has not published specific hardware sizing guidance for namespace servers.

If DFS namespace servers need to be installed on dedicated servers, you can start with virtual servers with 2 CPU cores and 4 to 8 GB of memory. You can then increase CPU and memory based on observed usage and perfmon results.

DFSR Hotfixes

Before rolling out DFS-N and DFS-R servers, install all the latest Windows updates along with DFS-related hotfixes to avoid known issues.

Links are provided here

I have not yet found such a list for Windows Server 2016.

DFS Name Space Mode

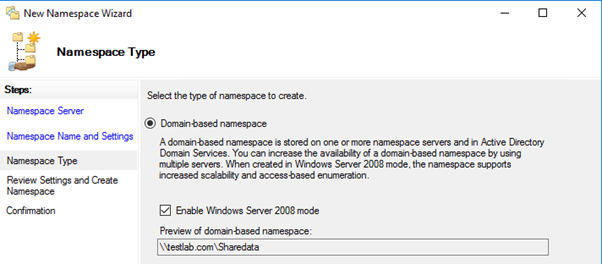

When installing a DFS namespace (DFS-N), always select Windows Server 2008 mode as the namespace mode. This mode improves scalability. It also allows access-based enumeration (ABE) to be used for DFS targets, if enabled.

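Active Directory prerequisites for Windows Server 2008 mode are as follows. The forest functional level must be at least Windows Server 2003, and the domain functional level at least Windows Server 2008. Namespace servers must run Windows Server 2008 or later.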

Refer to the Microsoft document below for additional information:

https://docs.microsoft.com/en-us/windows-server/storage/dfs-namespaces/choose-a-namespace-type

DFS Configuration & Server Placement

DFSR

DFSR is a multi-master replication engine. Ideally, when deploying DFS Replication:

- The DFSR topology needs to be designed based on your data access requirements across locations, storage requirements, available network bandwidth, user base, and the organisation's backup strategy.

- Avoid installing the DFSR role on domain controllers for file data. FRS is deprecated, so most DCs now replicate SYSVOL with DFSR. Keeping file replication on separate servers helps isolate DFSR-related issues.

- Avoid modifying data in the destination replicated folder until initial replication completes and the folders start replicating in both directions. Otherwise, DFSR detects conflicts during the initial sync process. Data modified on the destination is then renamed and moved to the PreExisting folder under the hidden DfsrPrivate folder, where users cannot access it. Hence, let users modify data on the primary target only until both folders replicate in both directions.

- If replication of a DFSR replicated folder needs to be stopped for any reason, change the replication schedule to No Replication. Do not stop the DFSR service. Doing so may put the DFSR database into a journal wrap state, which can take a long time to recover from.

- Microsoft does not recommend disabling connection objects to achieve one-way replication in replication groups. The only recommended way to achieve one-way replication is to use a read-only replica.

- DFSR supports multiple topologies, such as full mesh and hub and spoke.

In a mesh topology:

- All servers replicate with each other. This topology is useful when data redundancy or failover is required.

- Avoid creating R/W replicas (redundant copies) at the same location. Because DFSR has no file-locking mechanism, this risks conflicting changes and data loss. Workarounds exist, such as sharing only one instance of the replica and publishing a single folder target. Even so, it is safer to configure a read-only replica at the same site, which can be brought into service quickly with little downtime. Alternatively, a file server cluster can be built and added to the DFS namespace as a folder target.

- If the same data needs to be available at two locations, it can be made available at both through DFS replication. However, if data is modified on both sides, conflicts will occur due to replication latency or open files. If the same file is edited at both locations, the last edit wins. There is no built-in solution for this; you need a third-party solution such as PeerLock.

If third-party solutions are not allowed, you can avoid this situation as follows. Set up two-way DFS replication across both locations, but allow file modifications on only one side. To do this, publish a single DFS folder target per shared folder, pointing to a server at one location. However, users at the other location may experience performance issues when accessing data over the WAN.

In hub and spoke topology:

- All spokes (branch servers) replicate with a hub server and vice versa, but spokes do not replicate with each other. If a hub server goes down, replication halts until it comes back online. Hence, if there are multiple branch servers, divide them between two hub servers to minimize the impact. Data should be collected on the hub servers and backed up from there.

- If multiple branch replicated folders need to be configured against single or dual hub servers, complete the deployment in phases. This avoids heavy load on the hub server and choking the bandwidth.
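The difference in scale between the two topologies is easy to see by counting connections. A full mesh of n members needs n(n-1) one-way connections, while each spoke adds only two. The minimal sketch below enumerates both under stated assumptions: server names are hypothetical, and spokes are spread across two hubs round-robin, following the "divide branches between two hubs" advice above. Hub-to-hub connections are deliberately left out.

```python
from itertools import permutations


def mesh_connections(members):
    """Full mesh: every member has an inbound connection from every other member."""
    return list(permutations(members, 2))


def hub_spoke_connections(hubs, spokes):
    """Hub and spoke: each spoke gets a pair of connections (to and from) one hub.

    Spokes are spread across hubs round-robin (an illustrative policy, not a
    DFSR default). Hub-to-hub connections are intentionally left out.
    """
    connections = []
    for i, spoke in enumerate(spokes):
        hub = hubs[i % len(hubs)]
        connections.append((hub, spoke))  # hub -> spoke
        connections.append((spoke, hub))  # spoke -> hub
    return connections


if __name__ == "__main__":
    members = [f"FS{n:02d}" for n in range(1, 11)]  # hypothetical server names
    print(len(mesh_connections(members)))  # 10 * 9 = 90
    print(len(hub_spoke_connections(members[:2], members[2:])))  # 2 * 8 = 16
```

With ten servers, a full mesh already needs 90 connections, against 16 for two hubs and eight spokes. This is one reason hub and spoke is easier to manage as the number of branches grows.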

DFSN

Ideally, when deploying DFS namespace:

- A minimum of two servers should be deployed for high availability, load balancing, and redundancy. In a small or mid-sized environment, DFS-N and DFS-R can both be deployed on the same two servers

- In an environment where thousands of folder targets are required under a DFS hierarchy, it is advisable to separate DFS-N and DFS-R servers

- Use virtual servers as far as possible so that you can start with fewer resources and add more later if needed

- DFS-N can be installed on domain controllers to avoid separate hardware for namespace servers

- Configure DFS namespace referral settings as follows on the Referrals tab of the root namespace properties, so that all namespace folders below it inherit them:

- The target referral ordering method should be "Lowest cost". With this method, targets in the same AD site as the client are listed first. Targets outside the client's site follow, ordered from lowest to highest cost. Reference article: Set the Ordering Method for Targets in Referrals

- Also select "Clients fail back to preferred targets". Clients can then use an alternate folder target when the preferred one is unavailable, and fail back to the preferred target once it becomes available again. Reference article: Enable or Disable Referrals and Client Failback

- Some good reading on DFS namespaces: How many DFS-N namespace servers do you need?
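To make the "Lowest cost" behaviour concrete, here is a minimal sketch of the ordering it describes. It is not the DFS-N implementation. Same-site targets come first, other sites follow grouped by ascending cost, and targets within a cost group appear in random order. The `(server, site)` input format, the `site_cost` dictionary, and all server and site names are hypothetical stand-ins for your AD site link costs.

```python
import random


def order_referrals(targets, client_site, site_cost, rng=random):
    """Sketch of 'Lowest cost' referral ordering.

    targets:   list of (server, ad_site) tuples (hypothetical input format)
    site_cost: dict {(client_site, target_site): cost}, e.g. from site links
    Same-site targets get cost 0 and come first; the rest are grouped by
    ascending cost, and targets inside each group are shuffled.
    """
    groups = {}
    for server, site in targets:
        cost = 0 if site == client_site else site_cost[(client_site, site)]
        groups.setdefault(cost, []).append(server)

    ordered = []
    for cost in sorted(groups):
        group = list(groups[cost])
        rng.shuffle(group)  # equal-cost targets are listed in random order
        ordered.extend(group)
    return ordered


if __name__ == "__main__":
    targets = [("FS-LON1", "London"), ("FS-NYC1", "NewYork"),
               ("FS-PAR1", "Paris"), ("FS-LON2", "London")]
    costs = {("London", "Paris"): 100, ("London", "NewYork"): 300}
    print(order_referrals(targets, "London", costs))
```

A London client always gets both London targets first and the New York target last, which is why correct site and subnet configuration matters so much for referrals.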

Active Directory Health and AD Sites Configuration

Active Directory replication and name resolution must be working properly. DFS-N and DFS-R configuration data is stored in the AD domain partition and replicates among all domain controllers in that domain. DFS servers poll Active Directory periodically for updates. If a DFS configuration change made on one server does not replicate to other DCs, other DFS servers will not receive that change. This is especially relevant for DFS-R members in remote sites.

If redundant folder targets need to be added to a DFS namespace folder to cover multiple locations, make sure each location has a domain controller. AD Sites and Services must also be configured with local subnets mapped to the correct sites, so that resource access stays local. This matters because DFSN lists folder targets in the client's own AD site first, regardless of the target referral ordering you configure.

If a client subnet is mapped to the wrong site, or not mapped to any site, DFS namespace referrals point the client to the wrong folder target location. This can lead to conflicting changes, or to the client being referred to a read-only replica as its folder target.
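AD assigns a client to the site whose subnet object most specifically contains the client's IP address, and an unmapped address gets no site at all. The minimal sketch below uses Python's `ipaddress` module to illustrate that longest-prefix lookup, which can help when auditing a subnet list. The subnet-to-site table and addresses are hypothetical examples.

```python
import ipaddress


def site_for_client(client_ip, subnet_to_site):
    """Return the AD site whose subnet most specifically contains client_ip, or None."""
    ip = ipaddress.ip_address(client_ip)
    best = None
    for cidr, site in subnet_to_site.items():
        net = ipaddress.ip_network(cidr)
        # Prefer the longest (most specific) matching prefix.
        if ip in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, site)
    return best[1] if best else None


if __name__ == "__main__":
    subnets = {"10.1.0.0/16": "London", "10.1.20.0/24": "Paris"}  # hypothetical
    for client in ("10.1.20.5", "10.1.5.5", "192.168.1.10"):
        print(client, site_for_client(client, subnets))
```

The last address matches no subnet. In a real environment, that client would get no site, and its DFS referrals could not be localised reliably.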

Source data permissions (DFSR)

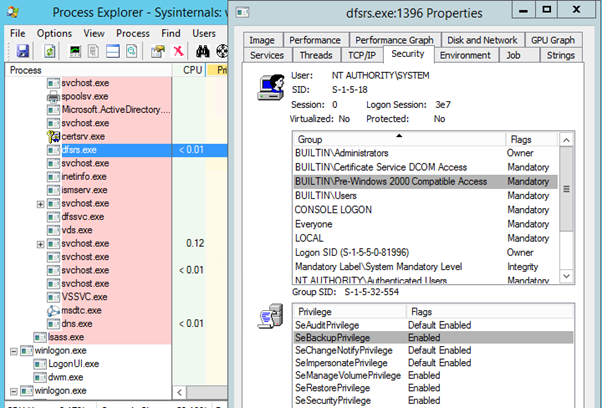

DFSR does not depend on file system permissions. By default, the DFSR service runs under the Local System (NT AUTHORITY\SYSTEM) account. However, if we inspect it with Process Explorer, we can see that the DFSR service also holds SeBackupPrivilege and SeRestorePrivilege.

These privileges correspond to the "Back up files and directories" and "Restore files and directories" user rights. They enable DFSR to read data at one location and write it at the destination regardless of file and folder access rights, except for open files.

Refer to the article below for a description of these privileges:

https://docs.microsoft.com/en-in/windows/desktop/SecAuthZ/privilege-constants

For a brand-new implementation without existing data, set the root folder with correct share and NTFS permissions to avoid access violation issues for users and administrators. Grant ownership of the root folder to the built-in Administrators group. Grant Full Control NTFS permissions to the SYSTEM account and the built-in Administrators group, and remove CREATOR OWNER from the root folder permissions.
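The NTFS part of these root-folder settings can be scripted with the built-in `icacls` tool. The minimal sketch below only builds and prints the command lines; it does not run them and does not set share permissions. It uses well-known SIDs (prefixed with `*`, as icacls accepts) so the commands do not depend on the OS display language. `D:\Corpdata` is just an example path. Note that `/remove` only removes explicit CREATOR OWNER entries. If the entry is inherited from the drive root, inheritance would need separate handling (for example with `/inheritance:d`), which this sketch does not do.

```python
import subprocess

# Well-known SIDs, so the commands work regardless of the OS display language.
ADMINISTRATORS = "*S-1-5-32-544"  # BUILTIN\Administrators
SYSTEM = "*S-1-5-18"              # NT AUTHORITY\SYSTEM
CREATOR_OWNER = "*S-1-3-0"        # CREATOR OWNER


def root_folder_acl_commands(root):
    """Return icacls command lines for the root-folder NTFS settings."""
    return [
        # Make the built-in Administrators group the owner of the root folder.
        ["icacls", root, "/setowner", ADMINISTRATORS],
        # Full control for SYSTEM and Administrators, inherited by files (OI) and folders (CI).
        ["icacls", root, "/grant", f"{SYSTEM}:(OI)(CI)F", f"{ADMINISTRATORS}:(OI)(CI)F"],
        # Remove explicit CREATOR OWNER entries from the root folder.
        ["icacls", root, "/remove", CREATOR_OWNER],
    ]


if __name__ == "__main__":
    for cmd in root_folder_acl_commands(r"D:\Corpdata"):
        print(subprocess.list2cmdline(cmd))
```

Review the printed commands before running them from an elevated prompt on the file server.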

If you already have existing data (file shares) and are already facing access issues, refer to my article below to correct them.

Windows File Server - Folder ownership problems and resolution

DFSR Staging Quota

DFSR uses the staging folder to stage files. It calculates each file's hash, stores it in the DFSR database, and then sends the file to the replication partner. The default staging quota is 4 GB. It is good practice to raise this limit as far as possible, to avoid staging loops and to complete initial sync in time.

Later on, we can reduce the staging quota if desired. Refer to the link below to calculate approximate staging quotas for a replicated folder.

Alternatively, we can initially set it to 20% of total drive capacity and increase it further if required by monitoring the backlog. This figure is based on a live environment I managed previously.
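Both sizing approaches reduce to simple arithmetic. Microsoft's guidance for Windows Server 2008 and later is to make the staging quota at least as large as the combined size of the 32 largest files in the replicated folder. The 20% rule above is the author's field rule of thumb. The minimal sketch below computes both figures in MB, rounded up. The folder path and volume size are examples, and skipping `DfsrPrivate` while scanning is an assumption that it should not count as replicated content.

```python
import heapq
import os

MB = 1024 * 1024


def sum_of_largest(sizes, count=32):
    """Sum of the `count` largest values in sizes."""
    return sum(heapq.nlargest(count, sizes))


def file_sizes(root):
    """Yield the size of every file under root, skipping the DfsrPrivate folder."""
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames[:] = [d for d in dirnames if d.lower() != "dfsrprivate"]
        for name in filenames:
            try:
                yield os.path.getsize(os.path.join(dirpath, name))
            except OSError:
                pass  # file vanished or is inaccessible; skip it


def quota_from_largest_files_mb(root, count=32):
    """Staging quota in MB (rounded up) from the N-largest-files rule."""
    return -(-sum_of_largest(file_sizes(root), count) // MB)


def quota_from_drive_share_mb(capacity_bytes, percent=20):
    """Staging quota in MB (rounded up) as a percentage of drive capacity."""
    return -(-capacity_bytes * percent // (100 * MB))


if __name__ == "__main__":
    print(quota_from_largest_files_mb(r"D:\Corpdata"))  # example folder
    print(quota_from_drive_share_mb(2 * 1024**4))       # example 2 TiB volume
```

The 32-largest figure is a floor for initial replication, while the percentage gives a generous starting point that you can trim later once the backlog stays low.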

Number of replicated folders per drive

Consider placing only one replicated folder per drive. This helps if you face issues such as a heavy backlog or DFSR database corruption and need to clear the DFSR database from System Volume Information. DFSR keeps one database per volume. If two replicated folders share a drive and only one has the issue, clearing the database wipes it out for both folders. Both would then have to undergo initial replication, which can take considerable time if the data size is large.
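When reviewing an existing design, a quick check is to group the replicated folder paths by drive letter and flag any drive that holds more than one. The minimal sketch below does this with `ntpath`, so it also runs on non-Windows machines. The folder list is a hypothetical example. Volumes mounted as folders (mount points) are not detected by a drive-letter check.

```python
import ntpath
from collections import defaultdict


def shared_volumes(replicated_folders):
    """Return {drive: [folders]} for drives hosting more than one replicated folder."""
    by_drive = defaultdict(list)
    for path in replicated_folders:
        drive, _ = ntpath.splitdrive(path)
        by_drive[drive.upper()].append(path)
    return {drive: paths for drive, paths in by_drive.items() if len(paths) > 1}


if __name__ == "__main__":
    folders = [r"D:\Corpdata", r"D:\HR", r"E:\Finance"]  # hypothetical layout
    print(shared_volumes(folders))
```

Here D: would be flagged, because a database reset for either folder would force initial replication of both.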

DFS-R Data Preseeding (pre-staging) and Cloning

Sometimes you are creating replication groups for existing data, or adding a new member to an existing replication group, and the data is large (hundreds of GB or several TB). In that case, initial sync can take a substantial amount of time because of the complexity of the initial sync process.

Initial sync must complete before data can replicate in both directions, and the process can take many days depending on the data size. To save time, we can preseed the data and then clone the DFSR database.

Preseeding:

Preseeding copies the data, along with NTFS security, from the source server to the destination server in advance using Robocopy. This saves the time needed to replicate the data over the wire. However, DFSR still needs time on the destination to stage every preseeded file, generate its hash, and store it in the database for initial sync. This process is local to the DFSR member. To save this staging time, we additionally need database cloning.

Note: The preseed process uses Robocopy. Make sure you use the most recent version of Robocopy, and that the same version is installed on both the source and destination servers, to avoid hash mismatches. Check the DFSR hotfix URLs for the Robocopy update.
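A preseed run is a single Robocopy command. The minimal sketch below builds it as an argument list and keeps the source folder name on the destination drive, as cloning requires. The switch set mirrors the one commonly shown in Microsoft's DFSR preseeding guidance: backup mode, all file info including security, retries, multithreading, and excluding `DfsrPrivate`. Treat it as an assumption to verify against the current documentation. `FS01`, `E:`, and the log path are placeholders.

```python
import ntpath
import subprocess


def preseed_command(source_path, dest_drive, log_path):
    r"""Build a robocopy command that preseeds source_path onto dest_drive.

    The destination keeps the source folder name,
    e.g. \\FS01\D$\Corpdata -> E:\Corpdata.
    """
    folder = ntpath.basename(ntpath.normpath(source_path))
    dest = ntpath.join(dest_drive.rstrip("\\") + "\\", folder)
    return [
        "robocopy.exe", source_path, dest,
        "/E",                  # copy subfolders, including empty ones
        "/B",                  # backup mode, so file ACLs do not block the copy
        "/COPYALL",            # data, attributes, timestamps, ACLs, owner, auditing
        "/R:6", "/W:5",        # retry failed files 6 times, 5 seconds apart
        "/MT:64",              # 64 copy threads
        "/XD", "DfsrPrivate",  # never copy DFSR's private folder
        "/TEE",                # echo output to the console as well as the log
        f"/LOG:{log_path}",
    ]


if __name__ == "__main__":
    cmd = preseed_command(r"\\FS01\D$\Corpdata", "E:", r"C:\Temp\preseed.log")
    print(subprocess.list2cmdline(cmd))
```

Run the printed command from an elevated prompt on the destination server. Review the log for failed files before moving on to cloning.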

Cloning:

Cloning means exporting the DFSR database from the primary server and importing it on the destination server. On import, DFSR validates the hashes in the cloned database against the local (preseeded) data on the destination server. Once they are validated, the clone import completes successfully. This removes the need to stage and hash each file on the destination server.

Then you add the member server to the replicated folder. Because the data and its hash values (database) are already present on both servers, the secondary server only verifies its database hashes against those of the primary server as part of initial sync. Once they are verified, the destination server can replicate data in both directions. This step is almost instantaneous or takes very little time.

Refer to the excellent blog post below on how to preseed DFSR data and clone the DFSR database with a simple PowerShell interface.

Note:

- Cloning only works on Windows Server 2012 R2 onwards

- DFSR database export (cloning) does not clone read-only replica databases; a read-only replica must be set up with the classic initial sync process only.

- DFSR database export (cloning) fails if the replicated folder is not fully converged. The folder must have either completed initial build (event ID 4112, primary member) or completed initial sync (event ID 4104, non-primary member). Before exporting the DFSR database, make sure one of these events is present in the DFSR event log for the replicated folder.

- Before cloning the database, make sure the data has been preseeded on the destination server with the same folder name as at the source (e.g., D:\Corpdata at the source must use <drive>:\Corpdata at the destination)
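The notes above amount to a short pre-flight checklist. The minimal sketch below encodes it as two hypothetical helper functions. One checks that the required convergence event (4112 on the primary, 4104 on a non-primary) appears in a list of event IDs you have already collected, for example with Get-WinEvent from the DFS Replication log. The other confirms that the preseeded folder name matches the source. Gathering the events is out of scope here, and `target_read_only` is an illustrative flag for the read-only restriction.

```python
import ntpath

PRIMARY_INITIAL_BUILD = 4112  # initial build completed on the primary member
INITIAL_SYNC_COMPLETE = 4104  # initial sync completed on a non-primary member


def ready_for_clone_export(event_ids, is_primary, target_read_only=False):
    """True if the replicated folder looks converged enough to export a clone."""
    if target_read_only:
        return False  # read-only replicas must use classic initial sync instead
    required = PRIMARY_INITIAL_BUILD if is_primary else INITIAL_SYNC_COMPLETE
    return required in set(event_ids)


def same_folder_name(source_path, dest_path):
    """True if source and preseeded destination use the same folder name."""
    src = ntpath.basename(ntpath.normpath(source_path))
    dst = ntpath.basename(ntpath.normpath(dest_path))
    return src.lower() == dst.lower()


if __name__ == "__main__":
    print(ready_for_clone_export([4102, 4112], is_primary=True))
    print(same_folder_name(r"D:\Corpdata", r"E:\Corpdata"))
```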

DFSR and roaming profiles

Replicating roaming profiles through DFSR should be avoided as far as possible, for the following reasons.

DFSR replication remains slow for roaming profiles because DFSR does not support transactional replication (i.e., replicating either all changes at once or nothing). This increases the backlog and might choke bandwidth if many users roam between locations. The effect is worse when users change data frequently, files stay open, or file handles cannot be closed.

This leads to issues when a user moves between locations and logs on through a different roaming profile server. That server may not yet have received all updates from the previous server, so the user might overwrite some data or not see updated data. Such behaviour complicates the situation further and can even corrupt profiles.

If you still want to deploy DFSR for roaming profiles, Microsoft provides the following guidelines.

If DFSN and DFSR are used to replicate roaming profiles across locations, users must always connect to the roaming profile share on a single server, regardless of their location. This prevents them from making conflicting edits on different servers. You can achieve this by keeping only a single folder target on the DFS namespace link used as the roaming profile path. However, this forces profiles to load over the WAN and slows down logon and logoff.

Microsoft Support Documents:

Excluding File types from DFSR (Filters)

- It is recommended to exclude from DFSR any file types that remain open most of the time (for example .pst), in addition to the default ~*, *.bak and *.tmp filters, because DFSR cannot replicate open files

- Avoid replicating .mdb or .vhd/.vhdx files: they are bulky, and DFSR cannot replicate them while they are in use. Likewise, if users' PST files are stored in a DFS replicated folder, DFSR cannot replicate them while Outlook has them open. This includes when a user's machine is locked with Outlook still running. Such files increase backlog pressure on DFSR, and a very large backlog degrades performance or can even stop DFSR replication.

- When we enable a filter for any file, folder, or file extension, DFSR removes the filtered files from its database and ignores any further changes to them. It leaves the filtered data as-is in the replicated folder, without modification.

Microsoft Reference: Removing DFSR Filters
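Before adding an extension such as `*.pst` to the file filter, it helps to check which existing files the wildcard patterns would catch. The minimal sketch below approximates filter matching with case-insensitive `fnmatch` wildcards, starting from the default DFSR file filter (`~*`, `*.bak`, `*.tmp`). This is an approximation of DFSR's matching, not its exact implementation, and the file names are examples.

```python
from fnmatch import fnmatchcase

DEFAULT_FILE_FILTER = ["~*", "*.bak", "*.tmp"]  # DFSR's default file filter


def is_filtered(filename, patterns):
    """Case-insensitive wildcard match of a file name against filter patterns."""
    name = filename.lower()
    return any(fnmatchcase(name, pattern.lower()) for pattern in patterns)


if __name__ == "__main__":
    patterns = DEFAULT_FILE_FILTER + ["*.pst"]  # proposed filter with PST added
    for f in ["Budget.PST", "~$report.docx", "old.BAK", "report.docx"]:
        print(f, is_filtered(f, patterns))
```

Remember that filtered files already in the replicated folder stay in place on each member and simply stop replicating.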

RDC and DFSR

Remote Differential Compression (RDC) is the algorithm DFSR uses by default on Windows Server 2008 R2 and later. RDC replicates only the changed chunks (deltas) of files, which saves bandwidth and makes it useful when replicating over WAN links.

However, it adds CPU load on the server, because RDC must detect insertions, removals, and rearranged data in files to create the delta chunks. Note that installing the separate RDC feature is not required on DFSR servers.

We should disable RDC on DFSR connections between members linked over a LAN with gigabit network cards and switches. This saves processing time and speeds up replication by transferring entire files in one shot.

More Explanatory Notes:

Disable replicated member vs delete replicated member

If you want to remove a member server from a two-way replicated folder, there are two options:

Simply disable the member instead of removing it. This stops replication on that server for the specific replicated folder. If you later need to resume replication, simply re-enable it for that server. The server must then complete initial sync (a one-way sync), so even data you deleted from this server will be copied back from the other partner. Once initial sync completes successfully (look for DFSR event ID 4104 on the affected server), replication resumes in both directions. This way you won't lose any data.

However, deleting a member from the DFSR group does not delete the DFSR database on that member. This database keeps the deleted member's records in a tombstone state for 60 days. If you add the deleted server back to the replicated folder within those 60 days, DFSR treats that server as authoritative and starts replicating in both directions. Any data you deleted from this member in the meantime is then deleted on the other server as well, and data loss occurs.

Hence, just disable the member for the replicated folder. If you later decide to remove the server permanently, you can do so at any time.

DFSR read-only replica

Windows Server 2008 R2 introduced the read-only replica. As the name suggests, it is read-only: replication is one-way, from the R/W partner to the R/O partner. This is useful when you specifically want to store data at a remote location as a form of DR.

Once a folder is set as a read-only replica, no one can write or delete data in it, locally or remotely. R/O replicas are useful if your R/W DFSR server fails along with its data and you need to recover that data.

We can change a read-only replica to a read-write replica and vice versa. When we do so, the member being altered undergoes initial sync, also called non-authoritative sync. Once initial sync completes, DFSR logs event ID 4104, and the server becomes an R/W or R/O replica depending on the action you took.

Microsoft Reference: Read-Only Replication in R2

A good way to use an R/O replica is:

- Create a DFSR group with one R/W replica and one R/O replica at the same location

- Add DFS folder targets only for the R/W replica under the DFS namespace, so that no one can access the R/O replica; in fact, don't share the R/O replica folder at all

- If the R/W replica fails permanently, convert the R/O replica to R/W, share the folder, and add a new folder target pointing to it; access will be restored quickly

- Later on, a new server can be added to the replication group as a read-only replica

DFSR Replication Schedule and Bandwidth

- DFSR lets us define the replication schedule and bandwidth. For WAN locations, allow initial replication only during off-business hours, with full bandwidth.

- For R/O replicas over WAN, schedule replication during off-business hours only, with full bandwidth.

- For locations where replicated data needs to be available during business hours, allow replication during business hours as well; if network bandwidth is limited, throttle it.
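To decide how many off-hours windows an initial replication over WAN will need, a rough estimate is data size divided by usable link speed. The minimal sketch below does that arithmetic using decimal units (1 GB = 10^9 bytes, 1 Mbps = 10^6 bit/s). The `efficiency` factor (default 0.8) is an assumed allowance for protocol overhead. Staging time, RDC, and compression are not modelled, so the result is only a planning figure.

```python
import math


def transfer_hours(data_gb, link_mbps, efficiency=0.8):
    """Hours to push data_gb over a link_mbps link at the given efficiency."""
    bits = data_gb * 1000**3 * 8
    usable_bps = link_mbps * 1000**2 * efficiency
    return bits / usable_bps / 3600


def nights_needed(data_gb, link_mbps, window_hours=10, efficiency=0.8):
    """Number of off-hours replication windows needed for initial replication."""
    return math.ceil(transfer_hours(data_gb, link_mbps, efficiency) / window_hours)


if __name__ == "__main__":
    # Example: 500 GB over a 100 Mbps WAN link with a 10-hour nightly window.
    print(round(transfer_hours(500, 100), 1))
    print(nights_needed(500, 100))
```

For 500 GB at 100 Mbps, the raw transfer alone is about 11 hours at full efficiency, so it will not finish in a single 10-hour night. Preseeding becomes attractive at that point.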

Antivirus Exclusions

Microsoft recommends antivirus exclusions for DFS root shares and their contents (folder targets), among others. These exclusions are the same as those for FRS- or DFSR-replicated SYSVOL. The article below explains which exclusions to configure:

Microsoft Reference: Virus scanning recommendations for Enterprise computers that are running currently supported versions of Windows

DFSN & DFSR Backup and Restore

To successfully restore DFS namespaces and replication after accidental deletion, back up the AD system state and the following items:

- Active Directory system state: both the DFSN and DFSR configurations are stored in Active Directory

- The DFS namespace configuration, exported with the Dfsutil utility: dfsutil /root:\\domain.com\dfsnameSpace /export:C:\export\namespace.txt

- The Shares registry key on each DFS namespace server, for the DFS root share: HKLM\SYSTEM\CurrentControlSet\Services\LanmanServer\Shares

- DFS namespace registry key on each server - HKLM\SOFTWARE\Microsoft\DFS

There are multiple ways to restore DFS, depending on which backup you have.

I will discuss the actual restoration in another article.

Microsoft reference: Recovery process of a DFS Namespace in Windows 2003 and 2008 Server

I hope this article will be helpful in building your DFS server infrastructure.



Comments (3)

Commented:

Thanks, Mahesh!

Commented:

Great article!

Are there any best practices regarding backup exclusions for DFS-R?

I can't find any documentation from Microsoft, so my best guess is that it's not recommended to exclude, say, "Staging/DfsrPrivate".

Best regards

Simon

Author

Commented:

https://www.veritas.com/content/support/en_US/article.100038589

DFS-R is a multi-master replication engine, and you should have at least two servers, so that you never need to back up the DFSR database.

Check the article below: it excludes the DfsrPrivate folder when you prestage a DFS replicated folder.

https://techcommunity.microsoft.com/t5/Storage-at-Microsoft/DFS-Replication-Initial-Sync-in-Windows-Server-2012-R2-Attack-of/ba-p/424877

If you found this article useful, please endorse it.

Mahesh.